a closer look at output

estimated read time: 7-9 minutesLots of people have used the OUTPUT clause in an INSERT/UPDATE/DELETE statement, but many may not know just how flexible it is, and what hidden gems there are in the documentation. In this post we are going to look at two interesting and surprising use cases for the OUTPUT clause.

The Basics

For those of you that might not know how OUTPUT works, let's walk through a quick demo:

CREATE DATABASE OutputDemo;

GO

USE OutputDemo;

GO

-- Big table of GUIDs

CREATE TABLE MyGuid (

MyGuidID INT IDENTITY(1,1),

GUID UNIQUEIDENTIFIER

);

-- GUID change log

CREATE TABLE MyGuid_Log (

MyGuid_LogID INT IDENTITY(1,1),

MyGuidID INT,

GUID UNIQUEIDENTIFIER,

ChangeDate DATETIME DEFAULT GETUTCDATE()

);

In the script we create a test database, create a table MyGuid to store some data, and also a log table MyGuid_Log to store historical records on what was changed in our table. At this point you have a few options to get the log table populated. You could implement a trigger on MyGuid that inserts records into the log, but you could also use the OUTPUT clause:

-- Insert 10000 records, log the records in the log table

INSERT INTO MyGuid ( GUID )

--== Insert the records into the log table as well

OUTPUT Inserted.* INTO MyGuid_Log ( MyGuidID,GUID )

SELECT TOP(10000)

NEWID()

FROM sys.all_columns AS c1

CROSS JOIN sys.all_columns AS c2;

-- Update records 100-1000

UPDATE MyGuid

SET GUID = NEWID()

--== Insert the newly updated records into the log table

OUTPUT Inserted.* INTO MyGuid_Log ( MyGuidID,GUID )

WHERE MyGuidID >= 100

AND MyGuidID <= 1000;

-- Delete Records 900-1000

DELETE FROM MyGuid

--== Insert the newly updated records into the log table

OUTPUT Deleted.* INTO MyGuid_Log ( MyGuidID,GUID )

WHERE MyGuid >= 900

AND MyGuid <= 1000;

Based on this code, all 10,000 inserts will also be inserted into the MyGuid_Log table as well as the newly updated records. We are accessing the new/updated records using the Inserted psuedo-table provided by the OUTPUT clause. This is similar to how you would interact with records in a trigger. When updating and deleting records you also have access to the Deleted psuedo-table, allowing you to log both the current and previous state of a record if desired. It's also important to note that you can insert into a table variable, or remove the INTO portion of the statement completely and just return the output as a result set to be consumed.

"Tee" Time

A common command in the Linux world is the tee command. What tee allows you to do is pipe the output of a command to a file as well as the console. This same functionality can be implemented using multiple OUTPUT clauses in a T-SQL statement. In this example we are going to update a few hundred records. When the update statement is run, not only will it update the MyGuid table but it will update a log table and also return the result of the update. This is accomplished by using two OUTPUT clauses.

USE OutputDemo;

GO

-- Update records 100-1000

UPDATE MyGuid

SET GUID = NEWID()

--== Insert records into a log table

OUTPUT Inserted.* INTO MyGuid_Log ( MyGuidID, GUID )

--== Also return the updated records as a result set

OUTPUT Inserted.*

WHERE MyGuidID >= 100

AND MyGuidID <= 1000;

This can be useful in cases where you need to do some logging but also need to know what records were just altered by a statement. In my example I am selecting * from Inserted, but you could just as easily select the ID column only (or any column you need), and return that to the app making the call.

SELECT FROM ... UPDATE?

While the "tee" functionality is useful, this next behavior took me by surprise. In this example we are going to illustrate how to nest an UPDATE statement within an INSERT statement as a sub-query. This feature of the OUTPUT clause is discussed in Microsoft Docs, but because they chose to illustrate the concept using a MERGE statement, it isn't immediately obvious what it's doing. In this script we are going to be working with some product inventory information. We will create an ItemQty table to store item quantity information, an Item table that store item details (just a name in this case), and an Item_History table to store changes to an item.

USE OutputDemo;

GO

CREATE TABLE Item (

ItemID INT IDENTITY(1,1),

ItemName NVARCHAR(128)

)

CREATE TABLE ItemQty (

ItemID INT,

Qty INT

);

CREATE TABLE ItemQty_History (

ItemId INT,

ItemName NVARCHAR(128),

OldQty INT,

NewQty INT,

ChangeDate DATETIME DEFAULT(GETUTCDATE())

);

Now we are going to populate our Item table with a few random string values (GUIDs), and then we will insert some item quantity data based on these items. We are going to use a "tally table" in this example just to generate some rows.

WITH lv0 AS (SELECT 0 g UNION ALL SELECT 0)

,lv1 AS (SELECT 0 g FROM lv0 a CROSS JOIN lv0 b) -- 4

,lv2 AS (SELECT 0 g FROM lv1 a CROSS JOIN lv1 b) -- 16

,lv3 AS (SELECT 0 g FROM lv2 a CROSS JOIN lv2 b) -- 256

,lv4 AS (SELECT 0 g FROM lv3 a CROSS JOIN lv3 b) -- 65,536

,lv5 AS (SELECT 0 g FROM lv4 a CROSS JOIN lv4 b) -- 4,294,967,296

,Tally (n) AS (SELECT ROW_NUMBER() OVER (ORDER BY (SELECT NULL)) FROM lv5)

INSERT INTO Item (ItemName)

SELECT TOP(1000)

NEWID()

FROM Tally;

GO

INSERT INTO ItemQty ( ItemID, Qty)

SELECT ItemID,

ABS(BINARY_CHECKSUM(NEWID())) % 1000 --= Generate a random number between 0-999

FROM Item;

GO

So far we have an Item table full of some fake items, and an ItemQty table full of item quantity values. Now we want to run a statement to update quantity, but we need to make sure that change gets logged in our history table. When we created the history table we included the Item.ItemName column, as well as the old and new values for ItemQty.Qty. Using a single T-SQL statement, and no triggers, we can update quantity while also inserting data from two different tables into a history table. Not only are we going to combine data from two tables, but we'll also be making sure we are only inserting data when the quantity was actually changed. If the old and new quantity values are the same, nothing will be inserted.

INSERT INTO dbo.ItemQty_History

(

ItemId,

ItemName,

OldQty,

NewQty

)

SELECT NewData.ItemId,

NewData.ItemName,

NewData.OldQty,

NewData.NewQty

FROM (

--== Treat an UPDATE as a sub-query, using OUTPUT

UPDATE iq

SET Qty = 1

OUTPUT Inserted.ItemID,

Item.ItemName,

Deleted.Qty AS OldQty,

Inserted.Qty AS NewQty

FROM dbo.ItemQty AS iq

INNER JOIN dbo.Item

ON iq.ItemID = Item.ItemID

WHERE Qty > 100

--== Now we alias the OUTPUT as 'NewData'

) AS NewData ( ItemId, ItemName, OldQty, NewQty )

WHERE NewData.OldQty <> NewData.NewQty; --== A where clause applied to the output of the UPDATE

Since we are treating the output of the UPDATE statement as a table, we can filter that output, making sure we only get records that have changed. If we look at our history table, we should now see records where the quantity was changed:

SELECT * FROM dbo.ItemQty_History;

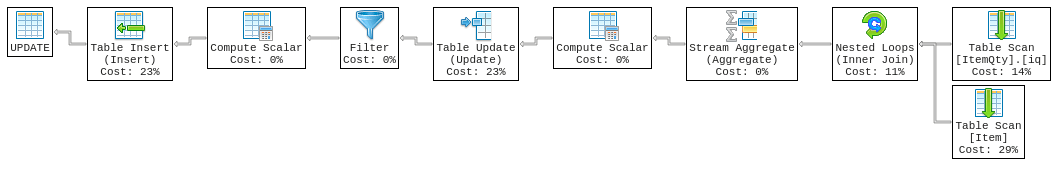

One of the more interesting things about this method is the query plan. Based on some analysis using live query stats, the plan seems to stream the records into the history table as the updates occur. There are no intermediate objects created here, so unless your use case forces a merge join or another operation requiring sorting, your tempdb usage should be minimal. Here is the plan on my system (using SQL Operations Studio running against a SQL on Linux container):

Final Thoughts

Like anything else in SQL Server, you need to weigh the benefits of using any of the code above with how unfamiliar it might be to people that have to maintain it. With proper commenting you should be able to make it clear what you are trying to accomplish. There are a few restrictions when using OUTPUT, so I would suggest reading through the full documentation to make sure you fully understand what you are getting into. Along with a full explanation of the restrictions, there are also a few other neat use cases proposed, like a queue implemented using DELETE TOP(1) and OUTPUT.